A Maryland student was arrested after an AI-powered camera mistook a bag for a gun

A 16-year-old Maryland student was arrested after an artificial intelligence security system falsely identified an empty Doritos bag as a firearm, triggering an armed police response at Kenwood High School in Baltimore County.

Bodycam footage from the incident shows officers confronting and handcuffing student-athlete Taki Allen after the AI system flagged what it believed to be a weapon. Allen said he had just finished football practice and was eating a bag of Doritos when the alert was issued. Moments later, police arrived, ordered him to the ground, and conducted a search—only to discover an empty snack bag in his pocket.

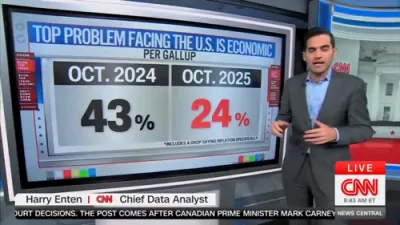

The embarrassing mix-up highlights growing concerns over the reliability of AI-based surveillance systems being adopted in schools across the United States. Designed to detect potential threats and prevent shootings, these systems have increasingly faced criticism for false positives, bias in detection algorithms, and the emotional toll such incidents take on students.

While the Baltimore County Police Department confirmed that no weapon was found and Allen was released without charges, the incident has sparked a debate over whether technology designed to “keep students safe” is instead fostering a culture of fear and mistrust.

Critics argue that the rush to deploy artificial intelligence in law enforcement and education—without adequate oversight—risks replacing sound human judgment with flawed automation. Proponents maintain that these tools, if refined, could one day prevent real tragedies.

For now, the case of a Doritos bag mistaken for a firearm serves as a sobering reminder: artificial intelligence may be powerful, but it still lacks common sense.