Musk Says AI Satellites Will Cut Costs in Half

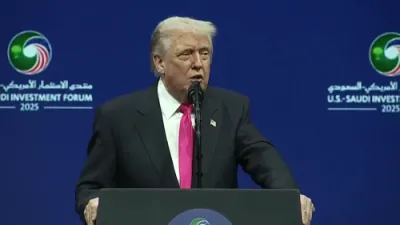

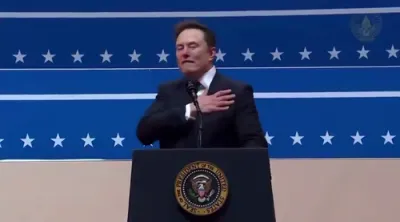

Elon Musk set off a major discussion about the future of AI energy consumption during a panel at the U.S.–Saudi Investment Forum on November 19, 2025, predicting that space will soon become the most cost-effective location for large-scale artificial intelligence computing. Musk said that within four to five years, solar-powered AI satellites in low-Earth orbit will provide cheaper compute than anything that can be built on the ground, even before the world begins to run into hard energy limits.

Musk explained that as AI demand continues to skyrocket, Earth-based energy infrastructure will struggle to keep pace. By some projections, AI systems could require around 300 gigawatts of continuous power, roughly two-thirds of the average electrical load of the United States today. By placing compute in orbit, powered directly by uninterrupted sunlight and unaffected by terrestrial cooling constraints, Musk believes SpaceX’s Starlink architecture could deliver unprecedented efficiency. He described orbital AI stations as an inevitable step in solving the coming crunch in global compute capacity.
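
The two-thirds comparison can be sanity-checked with a quick unit conversion. The sketch below turns a continuous 300-gigawatt draw into annual energy and compares it with U.S. consumption. The 4,000 TWh-per-year figure is an outside, rounded assumption and not a number from the panel, and the function name is purely illustrative.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def gw_to_twh_per_year(gigawatts: float) -> float:
    """Convert a continuous power draw in GW to annual energy in TWh."""
    return gigawatts * HOURS_PER_YEAR / 1000.0


# Assumption (not from the article): the U.S. consumes roughly
# 4,000 TWh of electricity per year.
ASSUMED_US_TWH_PER_YEAR = 4000.0

ai_twh = gw_to_twh_per_year(300)
share = ai_twh / ASSUMED_US_TWH_PER_YEAR
print(f"{ai_twh:.0f} TWh/year, {share:.0%} of assumed U.S. consumption")
```

Under that assumption, 300 gigawatts running around the clock works out to about 2,628 TWh per year, which is close to two-thirds of U.S. electricity use.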

NVIDIA CEO Jensen Huang supported Musk’s core argument by pointing to how much of today’s supercomputer hardware goes to cooling rather than computation. According to Huang, each cutting-edge rack weighs approximately two tons, and about 1.95 tons of that, roughly 98% of the mass, is cooling equipment rather than computing hardware. He said that new designs, especially NVIDIA’s emerging GB300 chip systems, will shrink these supercomputers dramatically, reducing both weight and energy demands while increasing performance.

Together, Musk and Huang painted a picture of an AI industry on the brink of a fundamental transformation—driven by soaring energy needs, breakthrough chip architecture, and the promise of orbital compute platforms. Their shared message was clear: as AI accelerates, the world must rethink not only where computation happens, but how energy should be produced, delivered, and conserved to keep the future of intelligence running.